When you want to run your application in AWS, the sheer number of services can make it difficult to understand where your security responsibilities lie. The AWS Shared Responsibility Model documents these responsibilities and provides some guidance on securing your cloud application. In this post, I will explain the intricacies of the model through a series of practical examples.

The basics

Let’s start with the basics. The AWS Shared Responsibility Model consists of two types of responsibilities: security of the cloud, and security in the cloud.

In theory, AWS is only responsible for security of the cloud: in other words, protecting the infrastructure that runs its services, such as the physical facilities, hardware, and networking. The customer, on the other hand, is responsible for making sure that the application running on the platform is sufficiently secure.

In practice, the exact responsibilities can change greatly depending on the service being used! This can make the model difficult to understand. To make things easier, we can roughly classify AWS services as non-managed (also known as “Infrastructure as a Service”), managed, or serverless.

Below, I’ll explain how the model works in practice by looking at four ways of hosting an application: AWS EC2, AWS Elastic Container Service, AWS Fargate, and AWS Lambda.

Non-managed services: AWS EC2

Let’s say that we want to build a scalable application that we can customize to our liking. One solution is to run it in containers on our own Kubernetes cluster on EC2 instances, and let Kubernetes scale the containers up as our application receives more traffic.
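In Kubernetes, this kind of traffic-driven scaling is commonly handled by the Horizontal Pod Autoscaler (HPA). Its documented core rule is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no action taken when the ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below implements only that rule; the function name and the min/max defaults are illustrative assumptions, and the real controller adds behavior (stabilization windows, handling of missing metrics and unready pods) that is not modeled here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,   # illustrative default
    max_replicas: int = 10,  # illustrative default
    tolerance: float = 0.1,
) -> int:
    """Approximate the HPA scaling rule for a single metric (sketch only)."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 pods at 90% average CPU against a 60% target: scale out to 6 pods.
print(desired_replicas(4, current_metric=90, target_metric=60))
```

Note that the cluster operator owns every part of this loop in the self-managed setup: the metrics pipeline, the autoscaler, and the nodes the new pods land on.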

A benefit of a self-hosted platform like this is that you have complete control over where and how your containers run. The central component that handles the configuration of our container orchestration is called the control plane. As we will see, AWS services have control planes as well.

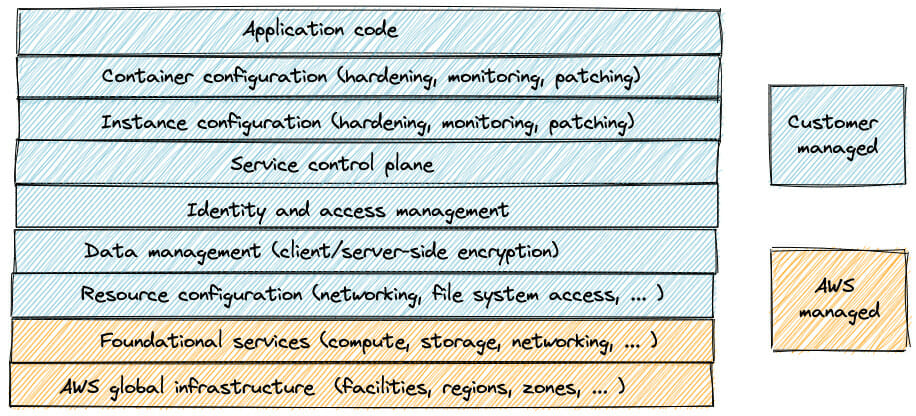

Let’s take a look at what this means for the shared responsibility model:

In a fully self-managed cluster, AWS is only responsible for securing two things: the data center facilities (in the form of regions and availability zones) and the underlying server hardware on which our application runs. Everything else (which, as you can see, is quite a lot!) we must manage ourselves.

Managed services: AWS ECS

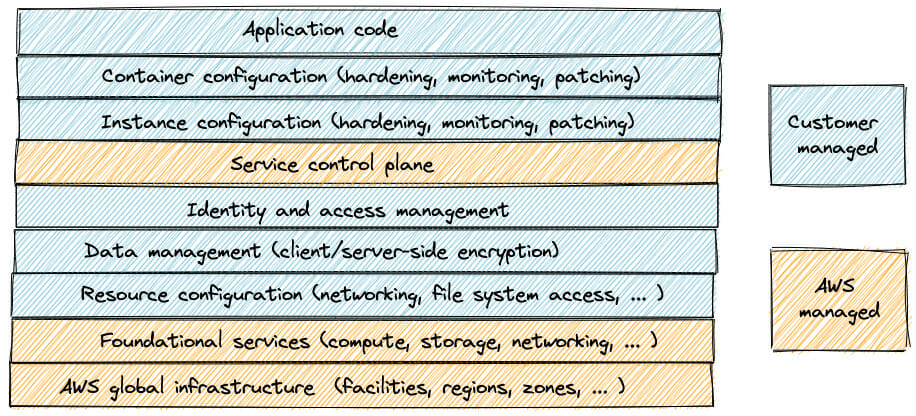

Since it requires quite a lot of effort to set up and maintain our own orchestration framework, we decide that we want to let AWS take care this. A managed service such as Elastic Container Service (ECS) can help with this. Our shared responsibility model will look like the following:

AWS now assumes responsibility for preventing malicious users from accessing our control plane and for ensuring that the right number of instances is available. Great!
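Keeping "the right number" of units running is, at its core, a reconciliation loop: the control plane repeatedly compares the desired count with what is actually running and corrects the difference (in ECS, the service scheduler does this for a service's desired number of tasks). The sketch below is a simplified, hypothetical illustration of that idea, not how ECS is implemented; the `Cluster` class and `reconcile` function are invented for this example.

```python
from itertools import count


class Cluster:
    """Hypothetical in-memory stand-in for the tasks a control plane manages."""

    def __init__(self) -> None:
        self.running: set[str] = set()
        self._ids = count(1)

    def start(self) -> str:
        task_id = f"task-{next(self._ids)}"
        self.running.add(task_id)
        return task_id

    def stop(self, task_id: str) -> None:
        self.running.discard(task_id)


def reconcile(cluster: Cluster, desired_count: int) -> None:
    """One reconciliation pass: converge the running tasks to desired_count."""
    difference = desired_count - len(cluster.running)
    if difference > 0:
        # Too few tasks (for example, one crashed): start replacements.
        for _ in range(difference):
            cluster.start()
    elif difference < 0:
        # Too many tasks: stop the surplus (sorted only to keep it deterministic).
        for task_id in sorted(cluster.running)[:-difference]:
            cluster.stop(task_id)


cluster = Cluster()
reconcile(cluster, desired_count=3)  # starts task-1, task-2, task-3
cluster.stop("task-2")               # simulate a task that crashed
reconcile(cluster, desired_count=3)  # starts task-4 to replace it
print(sorted(cluster.running))
```

With a managed service, it is this loop (and the API in front of it) that AWS now secures and operates, while the instances it places work on remain ours to patch.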

However, if a vulnerability is found in the underlying operating system of our instances, we still have to fix it ourselves! What if we don’t want to do that? Luckily, AWS offers additional options that handle patch management for us.

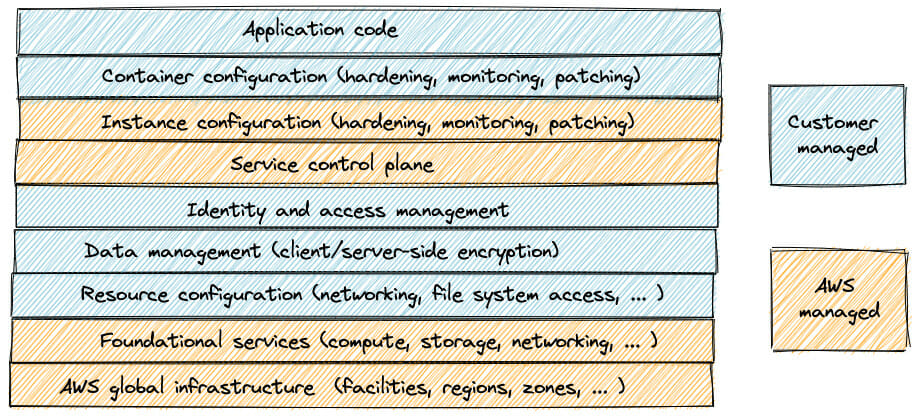

Serverless: AWS Fargate

One option would be to enable AWS Fargate for our ECS cluster. Because pricing is based on CPU and memory usage rather than EC2 instance count, AWS calls this model “Serverless”. The exact configuration of our servers is now in the hands of AWS, and we no longer have to worry about maintaining the operating systems of our servers. Our model looks like this:

One of the benefits of Fargate is that patches for operating system vulnerabilities are deployed automatically. For example, when the Spectre and Meltdown vulnerabilities were published, AWS customers who used a non-managed or managed container orchestration solution had to patch their systems themselves, whereas AWS applied the fixes on behalf of customers using AWS Fargate.

Serverless: AWS Lambda

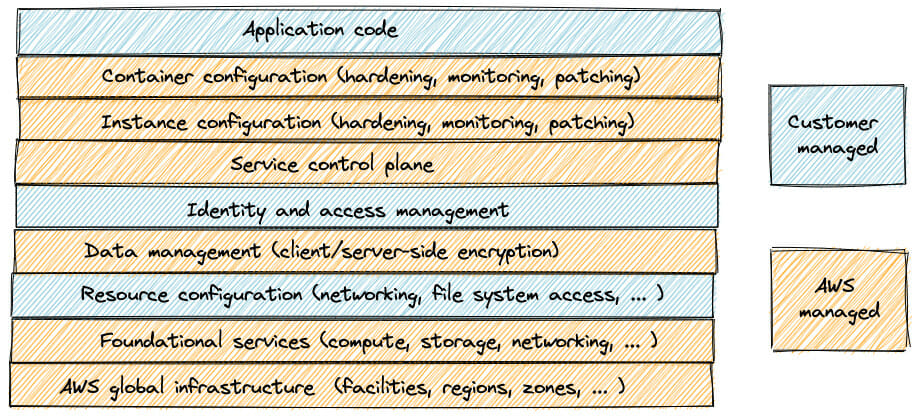

However, even with a service like Fargate, we are still responsible for managing our containers! If we are using a containerization service like Docker, it is our responsibility to maintain and update the container software on which our application is run. With AWS Lambda, we can transfer even more responsibilities to AWS. The final model looks like this:

So how do Lambda functions work under the hood? In simple terms, AWS Lambda has a control plane and a data plane. The control plane is the management API that handles all the calls for creating and updating the Lambda function code. The data plane (also called the Invoke API) is responsible for allocating your function’s execution environment to an available Lambda worker instance.
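To make the split concrete, the Lambda API actions can be grouped by plane. The sketch below classifies a handful of real Lambda API action names following the description above; the grouping is illustrative rather than an exhaustive map of the API, and the `Plane` enum and `plane_for` helper are hypothetical.

```python
from enum import Enum


class Plane(Enum):
    CONTROL = "control plane"  # management API: create, update, delete functions
    DATA = "data plane"        # Invoke API: runs the function code


# Illustrative subset of Lambda API actions, grouped by plane.
_DATA_PLANE_ACTIONS = {"Invoke", "InvokeWithResponseStream"}
_CONTROL_PLANE_ACTIONS = {
    "CreateFunction",
    "UpdateFunctionCode",
    "UpdateFunctionConfiguration",
    "GetFunction",
    "DeleteFunction",
}


def plane_for(action: str) -> Plane:
    """Return which plane handles a Lambda API action (hypothetical helper)."""
    if action in _DATA_PLANE_ACTIONS:
        return Plane.DATA
    if action in _CONTROL_PLANE_ACTIONS:
        return Plane.CONTROL
    raise ValueError(f"action not covered by this sketch: {action}")


for action in ("UpdateFunctionCode", "Invoke"):
    print(action, "->", plane_for(action).value)
```

The split also shows up in IAM: permission to call `Invoke` is granted through the `lambda:InvokeFunction` action, separately from management actions such as `lambda:UpdateFunctionCode`, so a caller that only needs to run a function does not need control-plane access.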

Lambda workers are EC2 Nitro instances that are backed by an EC2 instance store for temporary storage. These are very similar to the worker nodes that we saw in the self-hosted and managed service models. For every Lambda execution environment (which can be reused across multiple invocations), the hypervisor on one of the Lambda workers starts a Firecracker microVM.

Note that multiple Firecracker microVMs belonging to different AWS customers can run on a single EC2 instance! Because Firecracker isolates the execution environments that run concurrently on a worker, AWS now assumes responsibility for identifying and resolving security issues in the container layer, such as container breakout vulnerabilities.
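The placement behavior described in the last few paragraphs can be illustrated with a toy model: the Invoke API reuses an idle execution environment for the same function when one exists, otherwise it starts a new microVM on a worker with spare capacity, and environments belonging to different customers may share a worker. Everything here (`Worker`, `ExecutionEnvironment`, `InvokeRouter`, the per-worker capacity, first-fit placement) is a hypothetical simplification, not AWS's actual scheduling logic; the only behavior it borrows from Lambda is that one environment handles one invocation at a time.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)
class Worker:
    """Stands in for one Lambda worker (an EC2 instance) hosting microVMs."""

    name: str
    capacity: int  # hypothetical maximum number of microVMs per worker
    environments: list["ExecutionEnvironment"] = field(default_factory=list, repr=False)


@dataclass(eq=False)
class ExecutionEnvironment:
    """Stands in for one Firecracker microVM serving a single function."""

    account: str
    function: str
    worker: Worker = field(repr=False)
    busy: bool = False


class InvokeRouter:
    """Toy data plane that assigns invocations to execution environments."""

    def __init__(self, workers: list[Worker]) -> None:
        self.workers = workers

    def acquire(self, account: str, function: str) -> ExecutionEnvironment:
        # 1. Reuse an idle ("warm") environment of the same account and function.
        for worker in self.workers:
            for env in worker.environments:
                if (env.account, env.function) == (account, function) and not env.busy:
                    env.busy = True
                    return env
        # 2. Otherwise start a new microVM on the first worker with spare capacity,
        #    regardless of which customers already have environments there.
        for worker in self.workers:
            if len(worker.environments) < worker.capacity:
                env = ExecutionEnvironment(account, function, worker, busy=True)
                worker.environments.append(env)
                return env
        raise RuntimeError("no worker capacity left")

    def release(self, env: ExecutionEnvironment) -> None:
        # The environment stays warm and may serve a later invocation.
        env.busy = False


router = InvokeRouter([Worker("worker-1", capacity=2), Worker("worker-2", capacity=2)])
first = router.acquire("customer-a", "resize-images")
router.release(first)
second = router.acquire("customer-a", "resize-images")  # reuses the warm environment
other = router.acquire("customer-b", "send-email")      # new microVM on the same worker
print(second is first, other.worker.name)
```

In this model, `customer-a` and `customer-b` end up on the same worker, which is exactly why the isolation between microVMs becomes AWS's responsibility rather than the customer's.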

Lambda users are therefore responsible for three things: the security of the application code (think of patching vulnerable dependencies, such as the Log4Shell vulnerability in Log4j), the resource configuration of the function (subnets, file system access, triggers, and so on), and the Identity and Access Management (IAM) policy associated with the function.
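Of these three, the IAM policy is easy to get wrong by being too generous. Below is a minimal sketch of a least-privilege execution-role policy for a function that only writes its own logs, together with a small check that flags `Allow` statements using wildcard actions or resources. The policy follows the standard IAM JSON policy format, but the region, account ID, and log group name in the ARN are placeholders, and `find_broad_statements` is a hypothetical helper, not an AWS tool (IAM Access Analyzer provides real policy validation).

```python
# Least-privilege execution-role policy: the function may only write to its own
# log group. Region, account ID, and function name are placeholders, and the
# sketch assumes the log group already exists (no logs:CreateLogGroup).
LOGGING_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["logs:CreateLogStream", "logs:PutLogEvents"],
            "Resource": "arn:aws:logs:eu-west-1:111122223333:log-group:/aws/lambda/my-function:*",
        }
    ],
}


def _as_list(value):
    # IAM allows "Action" and "Resource" to be a single string or a list.
    return [value] if isinstance(value, str) else list(value)


def find_broad_statements(policy: dict) -> list[int]:
    """Return indices of Allow statements with wildcard actions or resources.

    Sketch only: NotAction, NotResource, and Condition blocks are ignored.
    """
    statements = policy["Statement"]
    if isinstance(statements, dict):  # a single statement may be given as an object
        statements = [statements]
    flagged = []
    for index, statement in enumerate(statements):
        if statement.get("Effect") != "Allow":
            continue
        actions = _as_list(statement.get("Action", []))
        resources = _as_list(statement.get("Resource", []))
        broad_action = any(a == "*" or a.endswith(":*") for a in actions)
        broad_resource = "*" in resources
        if broad_action or broad_resource:
            flagged.append(index)
    return flagged


too_broad = {
    "Version": "2012-10-17",
    "Statement": [
        LOGGING_POLICY["Statement"][0],
        {"Effect": "Allow", "Action": "s3:*", "Resource": "*"},
    ],
}
print(find_broad_statements(LOGGING_POLICY))
print(find_broad_statements(too_broad))
```

The check deliberately treats only a bare `"*"` resource as too broad, so a resource ARN ending in `:*` (which matches the log streams inside one log group) is not flagged.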

Takeaways

As we have seen, your security responsibilities in the cloud can vary considerably depending on the service that you choose. It is easy to see how the official AWS documentation can lead to confusion as well: even though Fargate and Lambda are both called “Serverless”, our security responsibilities are not identical!
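The four examples in this post can be condensed into a deliberately simplified layered view in which each service hands one more layer of the stack to AWS. The layer names and cut-off points below are an interpretation of the examples above, not an official AWS classification, and `customer_layers` and `responsibility_shift` are hypothetical helpers.

```python
# Simplified stack, ordered from the bottom (physical) to the top (application).
# Layer names are an illustrative interpretation, not an official AWS taxonomy.
LAYERS = [
    "facilities",
    "server hardware",
    "orchestration control plane",
    "host operating system",
    "container layer",
    "application code",
    "resource configuration",
    "IAM policies",
]

# Number of bottom layers AWS secures for each hosting option in this post.
AWS_OWNED_LAYER_COUNT = {
    "EC2 (self-managed Kubernetes)": 2,
    "ECS": 3,
    "ECS on Fargate": 4,
    "Lambda": 5,
}


def customer_layers(service: str) -> list[str]:
    """Layers the customer still has to secure for a given hosting option."""
    return LAYERS[AWS_OWNED_LAYER_COUNT[service]:]


def responsibility_shift(from_service: str, to_service: str) -> list[str]:
    """Layers that move from the customer to AWS when switching services."""
    remaining = set(customer_layers(to_service))
    return [layer for layer in customer_layers(from_service) if layer not in remaining]


# Both are "Serverless", yet the container layer still changes hands.
print(responsibility_shift("ECS on Fargate", "Lambda"))
```

A flat list like this hides per-feature nuance, so it works better as a mnemonic than as a substitute for each service's own documentation.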

In the end, each AWS service has its own set of shared responsibilities. If you are designing your application architecture in AWS and want to take the shared responsibility model into account, it can help to ask yourself the following question: how many resources do I have at my disposal to properly secure my application myself?