“Every company is a data company”. That’s why most companies process sensitive data, such as PII or financial data. To improve a company’s service or profitability, this data is often fed into machine learning algorithms to detect patterns. For data scientists, being able to train machine learning models on production data is a big asset. Doing this securely isn’t easy, which is why security and privacy specialists often reject it. Their biggest fears are data exfiltration and a lack of oversight over what data is accessed by whom, where and when. These risks can be mitigated by placing Vertex AI Workbench instances within VPC Service Controls perimeters.

In this blog I’ll show how VPC Service Controls can be used to prevent data exfiltration and apply fine-grained access controls for Vertex AI Workbench instances. The so-called Service Perimeters that VPC Service Controls provide are designed specifically to limit access to Google’s APIs and prevent data from being extracted from an environment. The data scientists work in Vertex AI Workbench instances, and audit logging relies on personalized service accounts. I will walk through the setup at a high level.

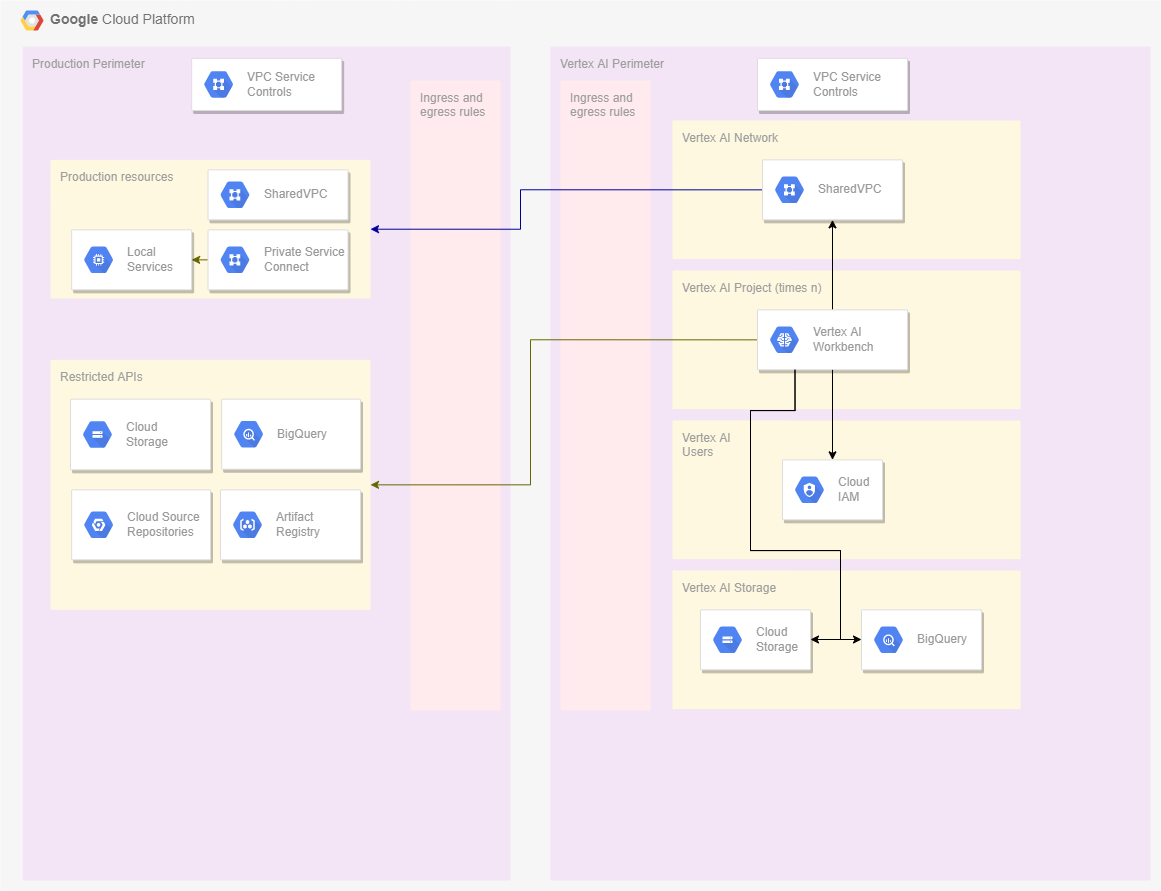

Reference design

This reference design gives a high-level impression of the infrastructure discussed in this blog.

Our toolbox

VPC Service Controls

What are VPC Service Controls?

The concept of VPC Service Controls (also referred to as Service Perimeters or just perimeters) deserves its own blog post, but to give you a general idea:

A couple of years back, Google introduced a flag at the subnet level called “Private Google Access”. It allows applications without an external IP address to call the Google APIs. In effect, it opened a gateway to all Google APIs from applications that were otherwise closed off from the internet.

There are situations where you want to limit which APIs can be called from an application. Google created VPC Service Controls to enable this, providing the means to set up a virtual perimeter around these APIs. Such a perimeter makes it possible to restrict which APIs can and cannot be called. VPC Service Controls can be enabled at the GCP organization level. Scoped policy configuration can then be delegated to folder-level and project-level administrators.

Why should you use VPC Service Controls?

Google describes the rationale behind VPC Service Controls in its documentation. In short, VPC Service Controls allow for stricter isolation of networks and services, and of the actions that can be performed from within these networks. This means that data can be stored within its own service plane, where rules govern data transactions and what can be done with the data. To make sure that resources can still reach the Google APIs, two special CIDR ranges are assigned to the API domains private.googleapis.com and restricted.googleapis.com.

Vertex AI Workbench

With Vertex AI Workbench, Google provides a managed set of tools that enable data scientists to rapidly develop machine learning models. It is a rebrand of what was previously known as AI Platform. It provides a full set of tools for data scientists to do their jobs, including a JupyterLab proxy service for rapid prototyping.

Private Service Connects

With Private Service Connect, a connection can be made from resources in one perimeter to self-managed resources in another perimeter. This is necessary because the data science side has no internet connection, so requests can’t traverse the internet.

Personalized Service Accounts

To allow proper auditing of data access, we need a clear way to identify the person who is making a request. Since all requests coming from a machine are identified by its service account, it can be hard to identify the actual person behind a request that comes from within the JupyterLab proxy. Therefore we will use personalized service accounts, each linked one-to-one to an individual person.

Step 1: Set up Service Perimeters

Which VPC Service Controls perimeters will we need?

For this use case we need two service perimeters: one for all our production services, where the data is also stored securely, and another where the Vertex AI Workbench instances can be deployed. The latter only needs access to specific services in the production perimeter and must not have any access to the outside world. Be aware that each perimeter should have its own Shared VPC. All resources connected to a network are considered to belong to the same perimeter, so sharing a network across perimeters can cause a lot of confusion.

Configure VPC Service Controls

Production perimeter

First create a service perimeter that will contain your production resources. Especially in an existing environment, this is one of the most sensitive parts. Verify that your current services can still connect to the required APIs once the perimeter is configured. This applies especially to resources like BigQuery and Cloud Storage, for which you need to restrict access. Configure the perimeter such that all projects belonging to it have full access to these resources. It’s possible to include specific projects into your perimeter, but it will be easier to configure it to include entire folders. This is easiest when you have structured your GCP organization correctly using folders.

AI perimeter

Next up is the AI perimeter. Configure it in a similar way to the production perimeter. Because this will usually be a new perimeter, it won’t be as challenging as the production perimeter. Again, pay attention to your policies and configure them according to your company’s requirements. This perimeter should not have an internet gateway. Enforce this by setting up the proper firewall rules and configuring the right organization policy constraints.

To still allow the resources within this perimeter to access the right APIs, configure routing to the Google APIs CIDR ranges by following the steps described in Google’s documentation. Note that the CIDR range for the restricted APIs is 199.36.153.4/30 and the range for the private APIs is 199.36.153.8/30. This is not clearly mentioned in that documentation.
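To make the two ranges concrete, here is a minimal Python sketch that expands them into individual addresses using only the standard library. The ranges are the ones quoted above; the function names are hypothetical helpers for illustration, not part of any Google tooling.

```python
import ipaddress
from typing import List, Optional

# CIDR ranges as quoted in the text above.
GOOGLE_API_RANGES = {
    "restricted.googleapis.com": ipaddress.ip_network("199.36.153.4/30"),
    "private.googleapis.com": ipaddress.ip_network("199.36.153.8/30"),
}


def addresses(domain: str) -> List[str]:
    """Return every IPv4 address in the range assigned to the given domain."""
    return [str(ip) for ip in GOOGLE_API_RANGES[domain]]


def domain_for(ip: str) -> Optional[str]:
    """Return the API domain whose range contains `ip`, or None if there is none."""
    address = ipaddress.ip_address(ip)
    for domain, network in GOOGLE_API_RANGES.items():
        if address in network:
            return domain
    return None


if __name__ == "__main__":
    for name in GOOGLE_API_RANGES:
        print(name, addresses(name))
```

Each /30 range expands to four addresses. A list like this can be handy when you write the DNS records and routes for the perimeter, or when you want to check which of the two domains a firewall log entry refers to.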

Storage

The data scientists will probably want to store intermediate models and work with the data within the perimeter. For this, add BigQuery datasets and Cloud Storage buckets within the perimeter itself. These storage resources should obviously allow both reading and writing, as opposed to the read-only policy set for the production perimeter.

Important note

Pay close attention to the access levels and access policies. If you fail to do this properly, you will notice that specific resources cannot be accessed correctly. The worst case is that you will experience failures in your production services, but it can also result in annoying HTTP 401 or 403 errors when trying to access the Jupyter notebook proxy that you will set up later.

Step 2: Configure access between perimeters

There are two ways to grant access from the AI perimeter to the production perimeter. One way is to set up a perimeter bridge. You can look at it as another perimeter that is used as an overlay across the projects that need access to each other. The other way is to specify ingress and egress rules. A perimeter bridge can be sensible if you have a fixed set of projects. A bridge also needs to be configured at the organization level. For this blog, ingress and egress rules will be used, because they allow for a more granular configuration of the desired policies. They also provide the option to use Private Service Connect to reach self-managed services.

Egress from AI Perimeter

Create an egressFrom rule for the projects in your AI perimeter and set its identity type to ANY_SERVICE_ACCOUNT. Then specify the egressTo block, where you list the projects containing your production Cloud Storage buckets and BigQuery datasets. Also describe the allowed operations. Since you will probably only want to allow read access to your production perimeter, specify the operations accordingly.
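As an illustration, the rule described above could be assembled like this in Python. This is a sketch under assumptions: the project numbers are hypothetical, and the method and permission selectors are my own pick of read-only operations, so check them against Google’s list of supported method restrictions before using them.

```python
import json
from typing import Dict, List

# Hypothetical project numbers of the production projects that hold the data.
PRODUCTION_PROJECTS = ["projects/111111111111", "projects/222222222222"]


def read_only_egress_policy(target_projects: List[str]) -> Dict:
    """Build one egress policy that only allows read calls to production data."""
    return {
        "egressFrom": {"identityType": "ANY_SERVICE_ACCOUNT"},
        "egressTo": {
            "resources": list(target_projects),
            "operations": [
                {
                    "serviceName": "storage.googleapis.com",
                    # Assumed read-only selectors for Cloud Storage.
                    "methodSelectors": [
                        {"method": "google.storage.objects.get"},
                        {"method": "google.storage.objects.list"},
                    ],
                },
                {
                    "serviceName": "bigquery.googleapis.com",
                    # Assumed read-only selectors for BigQuery.
                    "methodSelectors": [
                        {"permission": "bigquery.datasets.get"},
                        {"permission": "bigquery.tables.get"},
                        {"permission": "bigquery.tables.getData"},
                    ],
                },
            ],
        },
    }


if __name__ == "__main__":
    # Print the policy list so it can be reviewed or converted to YAML.
    print(json.dumps([read_only_egress_policy(PRODUCTION_PROJECTS)], indent=2))
```

Generating the rule from code rather than writing it by hand keeps the list of production projects in one place, which helps when projects come and go.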

Ingress on Production Perimeter

The previous egress rule only described the allowed traffic from the perspective of the AI perimeter. Now create a similar rule to allow the actual ingress on the production perimeter. You can use the same description as before, but in this case, use the ingressFrom and ingressTo rules. Here you also need to ensure that only read access is allowed for data inside production. Additionally, you can choose to apply tooling to remove PII data with a high impact level (NIST – PII – Impact Level Definitions).
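The ingress side can be sketched in the same way. The example below builds an ingress policy and adds a small check that flags any selector outside a read-only allow-list. The project numbers, the allow-list and the helper names are all assumptions made for illustration.

```python
from typing import Dict, List

# Illustrative read-only allow-list; verify it against Google's documentation.
READ_ONLY_SELECTORS = {
    "storage.googleapis.com": [
        {"method": "google.storage.objects.get"},
        {"method": "google.storage.objects.list"},
    ],
    "bigquery.googleapis.com": [
        {"permission": "bigquery.datasets.get"},
        {"permission": "bigquery.tables.get"},
        {"permission": "bigquery.tables.getData"},
    ],
}


def read_only_ingress_policy(source_projects: List[str], target_projects: List[str]) -> Dict:
    """Build an ingress policy that admits read calls from the AI perimeter projects."""
    return {
        "ingressFrom": {
            "identityType": "ANY_SERVICE_ACCOUNT",
            "sources": [{"resource": project} for project in source_projects],
        },
        "ingressTo": {
            "resources": list(target_projects),
            "operations": [
                {"serviceName": service, "methodSelectors": list(selectors)}
                for service, selectors in READ_ONLY_SELECTORS.items()
            ],
        },
    }


def is_read_only(policy: Dict) -> bool:
    """Return True when every selector in the policy is on the allow-list."""
    for operation in policy["ingressTo"]["operations"]:
        allowed = READ_ONLY_SELECTORS.get(operation["serviceName"], [])
        if any(selector not in allowed for selector in operation["methodSelectors"]):
            return False
    return True


if __name__ == "__main__":
    rule = read_only_ingress_policy(["projects/333333333333"], ["projects/111111111111"])
    print(is_read_only(rule))
```

A check like `is_read_only` can run in a review pipeline, so that a wildcard or write method never reaches the production perimeter unnoticed.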

Private Service Connect

Often some resources still need to be accessible, even from a secured perimeter. An example is a private Artifactory where only approved packages are hosted. Configure a Private Service Connect endpoint so that you can access the required resources.

Step 3: Create personal service accounts

For proper access control and audit logging, you need a way to control who has access to what and when. In a static environment access to specific data can be assigned manually for the default service accounts. In most cases, however, the projects will come and go, and so do employees. To solve this, we will centrally manage the service accounts.

Setting up the service accounts

This is typically the easiest step, depending on how your users are managed. Many companies use a system like Google Workspace or LDAP for this. From your user management system, generate a service account for each user who wants to use the data science tools. There is a caveat: the default quota for the number of service accounts per project is 100. If you have more users, you need to request a quota increase. Also, review these service accounts frequently, so that no accounts linger for employees who should no longer have access. The service accounts are best created and managed in a centralized project within the perimeter. You should be able to use the same project as your Shared VPC for this.
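How you generate the accounts depends on your identity system, but the naming step can be sketched in plain Python. The sketch below derives a deterministic service account ID per user and checks the user count against the default quota mentioned above. The `ds-` prefix, the hashing scheme and the function names are hypothetical choices; the only constraint assumed from Google Cloud is that an account ID is 6 to 30 characters of lowercase letters, digits and dashes.

```python
import hashlib
import re
from typing import Dict, Iterable

MAX_ID_LENGTH = 30   # upper bound for a service account ID
DEFAULT_QUOTA = 100  # default number of service accounts per project
PREFIX = "ds-"       # hypothetical naming convention


def service_account_id(user_email: str) -> str:
    """Derive a stable, valid service account ID from a user's email address."""
    email = user_email.lower()
    local_part = email.split("@", 1)[0]
    slug = re.sub(r"[^a-z0-9]+", "-", local_part).strip("-")
    # A short digest keeps IDs unique when two local parts collapse to the same slug.
    digest = hashlib.sha256(email.encode("utf-8")).hexdigest()[:6]
    room = MAX_ID_LENGTH - len(PREFIX) - len(digest) - 1
    slug = slug[:room].rstrip("-")
    return PREFIX + "-".join(part for part in (slug, digest) if part)


def plan_accounts(user_emails: Iterable[str], project_id: str) -> Dict[str, str]:
    """Map each user to the email address of their personal service account."""
    emails = sorted({email.lower() for email in user_emails})
    if len(emails) > DEFAULT_QUOTA:
        raise ValueError(
            f"{len(emails)} users exceed the default quota of {DEFAULT_QUOTA}; "
            "request a quota increase first"
        )
    return {
        email: f"{service_account_id(email)}@{project_id}.iam.gserviceaccount.com"
        for email in emails
    }


if __name__ == "__main__":
    print(plan_accounts(["jane.doe@example.com"], "my-sa-project"))
```

Because the ID is derived from the email address, rerunning the sync produces the same accounts, and comparing the plan with the existing accounts shows which ones belong to users who have left.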

Configure the access for the service accounts

There are two types of access that you now need to apply. The first is the access to the data, and the second is who can access which service account.

Data access

This step is typically manual. Someone with the privilege to assign access to datasets should allow specific access for each of the created service accounts. This should only be set to read access and on the required resources in the production perimeter.

Impersonation

Next, specify the user who can impersonate each service account. If this information is stored correctly in identity management (e.g. Google Workspace or LDAP), you can automate this in the same step as creating and managing the service accounts. Otherwise, you will need to do this manually, which quickly becomes cumbersome. Grant each user two roles on their personal service account: Service Account User and Service Account Token Creator. This should be done in the project where the service accounts are located.
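The resulting bindings can be sketched as plain data. The snippet below builds the IAM policy fragment for each personal service account, using the role IDs that correspond to Service Account User and Service Account Token Creator. The email addresses and function names are hypothetical, and applying the policy is left to whatever tool you already use.

```python
from typing import Dict, List

# Role IDs for "Service Account User" and "Service Account Token Creator".
IMPERSONATION_ROLES = (
    "roles/iam.serviceAccountUser",
    "roles/iam.serviceAccountTokenCreator",
)


def impersonation_bindings(user_email: str) -> List[Dict]:
    """Return the bindings that let one user impersonate a service account."""
    member = f"user:{user_email}"
    return [{"role": role, "members": [member]} for role in IMPERSONATION_ROLES]


def policies_for(assignments: Dict[str, str]) -> Dict[str, Dict]:
    """Map each service account email to the policy fragment to set on it.

    `assignments` maps a user's email to their personal service account email.
    """
    return {
        service_account: {"bindings": impersonation_bindings(user)}
        for user, service_account in assignments.items()
    }


if __name__ == "__main__":
    example = {
        "jane.doe@example.com": "ds-jane-doe@my-sa-project.iam.gserviceaccount.com",
    }
    print(policies_for(example))
```

Binding the roles on the individual service account, rather than on the whole project, keeps the one-to-one link between a person and their account intact.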

Step 4: Create Vertex AI Workbench in Secured Perimeter

We’re finally at the fun part: creating the Vertex AI Workbench instances in projects within the newly created AI Perimeter.

Access: user-owned or service account impersonation

There are two ways to restrict access to Vertex AI Workbench instances. An instance can be user-owned, or it can be accessible to anyone who can impersonate the connected service account. Remember that the service account is always required, so the additional user is optional.

It’s important to understand that requests made from any GCP resource with the Google Cloud SDK tools (gcloud, gsutil, bq etc.) will by default use the connected service account. However, this can be overridden by setting service account impersonation as the default in the gcloud config within the Workbench. Also, note that the bq command doesn’t allow for service account impersonation. This will require calling the API directly for now.
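Setting the default comes down to one gcloud property, `auth/impersonate_service_account`. The sketch below wraps that command in Python so it could run from a startup script. The service account email is hypothetical and the helper names are my own.

```python
import shutil
import subprocess
from typing import List


def impersonation_command(service_account_email: str) -> List[str]:
    """Return the gcloud command that makes impersonation the default."""
    return [
        "gcloud",
        "config",
        "set",
        "auth/impersonate_service_account",
        service_account_email,
    ]


def enable_impersonation(service_account_email: str) -> bool:
    """Run the command if gcloud is installed; return False when it is not."""
    if shutil.which("gcloud") is None:
        return False
    subprocess.run(impersonation_command(service_account_email), check=True)
    return True


if __name__ == "__main__":
    # Hypothetical personal service account; only the command is printed here.
    email = "ds-jane-doe@my-sa-project.iam.gserviceaccount.com"
    print(" ".join(impersonation_command(email)))
```

Once the property is set, later gcloud calls in that configuration act as the personal service account, which is what makes the audit logs traceable to a person.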

Step 5: Lifecycle Management

I will keep this short and leave the implementation up to the engineers. With lifecycle management we want to make sure that notebook instances don’t live forever. One reason is that they may hold PII data that should be removed within a specific amount of time (right to be forgotten). Another is that the cost of long-running instances rises dramatically if they are not managed properly. It is wise to make users aware of the price tag and give them the option to stop machines that are not actively used. If a machine should be removed without messing too much with the state files of tools like Terraform, you might just want to stop the machine and detach the boot disk. The resource can then safely be removed in a later run without the risk of it being restarted in the meantime.
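To give the engineers a starting point, the decision logic could look something like the sketch below. The thresholds, the `Instance` fields and the action names are all hypothetical. Looking up instances and actually stopping them or detaching disks is deliberately left out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

MAX_IDLE = timedelta(hours=8)  # hypothetical idle limit before stopping
MAX_AGE = timedelta(days=30)   # hypothetical retention period for an instance


@dataclass
class Instance:
    name: str
    created_at: datetime
    last_active_at: datetime
    running: bool


def lifecycle_action(instance: Instance, now: datetime) -> str:
    """Decide what the lifecycle job should do with one notebook instance."""
    if now - instance.created_at > MAX_AGE:
        # First run: stop and detach the boot disk. A later run removes the rest.
        return "stop_and_detach" if instance.running else "delete"
    if instance.running and now - instance.last_active_at > MAX_IDLE:
        return "stop"
    return "keep"


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    example = Instance("nb-jane", now - timedelta(days=2), now - timedelta(hours=9), True)
    print(lifecycle_action(example, now))
```

Splitting removal into a stop-and-detach run followed by a delete run mirrors the two-step approach described above, so an expired machine cannot be restarted between the two runs.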

Conclusion

This blog described how a company can allow data scientists to train machine learning models securely with production data. Getting this done is a complex task consisting of a combination of techniques. Teams implementing this strategy should be in close contact to ensure that priorities and actions are continuously aligned.

Once done, data scientists should be able to use their Vertex AI Workbench instances to train machine learning models with production data, within the confines of a security perimeter.

(Featured image by Pietro Jeng at Unsplash)